In this blog we will learn how to setup a Hadoop Fully Distributed Mode Cluster with one Namenode (master) and 2 Datanodes (slaves) using your Windows 64 bit PC or laptop

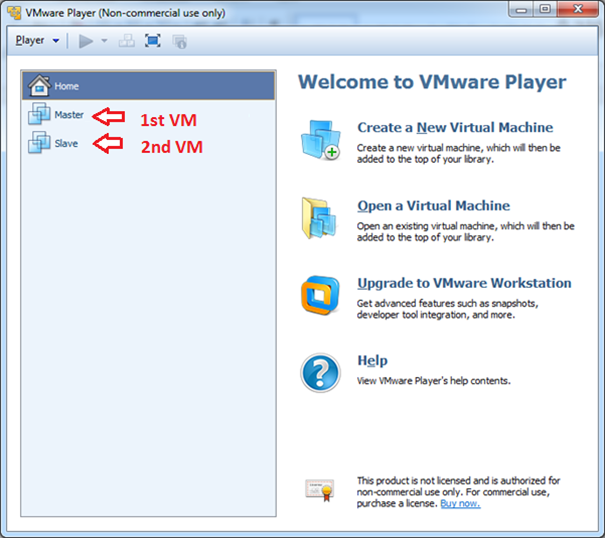

1. Towards this we need to have 2 Virtual Machines

(VM) in the same windows system ready, on which Hadoop should be installed in

Pseudo Distributed Mode.

For details on how to setup

Hadoop Pseudo Distributed Mode cluster, please refer my blog http://hadooppseudomode.blogspot.in/

. In this blog I have recorded detailed steps with supported screenshots

to install and setup Hadoop cluster in a Pseudo Distributed Mode using your

Windows 64 bit PC or laptop

Note: Using

VMware you can have 2 VM’s running simultaneously. To do that, open VMware player

by double clicking its desktop icon and select the 1st VM and play.

Then go back to your windows desktop and double click on the VMware desktop

icon to initiate it’s another session and play the 2nd VM

2. I will use the 1st virtual machine

(Named: Master) to work both as Namenode and Datanode. The 2nd

virtual machine (Named: Slave) will be used as Datanode.

Note: The names

given to these virtual machines in the VMware Player is only for identification

purpose and it does not affect the cluster setup. You are free to name your 2

VM as per your preference

3. Play both the VM’s (Master and Slave system) and

login using hduser account

4. We need Stop all the Hadoop services in both the

Master and Slave system. From the Master system type the below command and then

login to the Salve system and run the same command

/home/hduser/hadoop/bin/stop-all.sh

5. Note down the IP Address of both the VM’s. For

that open its LXTerminal and type command

ifconfig

6. My Master system (1st VM) IP address

is 192.168.xx.xx2 and my Slave system (2nd VM) IP address is 192.168.xx.xx3

7. Login to the Master system (1st VM).

Open its LXTerminal. Type the below command to check if the Slave system (2nd

VM) is in the same network and is accessible.

ping -c 5 <<ip address of the Slave system>>

ping -c 5 192.168.xx.xx3

Next step is to edit

the /etc/hosts file in which we will put the IPs and Name of each system in the

cluster. This has to be done in both the Master (1st VM) and Slave

(2nd VM) systems

8. From Master

system (1st VM) - Open LXTerminal and type the below command

sudo leafpad /etc/hosts

Enter hduser password and hosts

file will open

In the hosts file add the ip

address and name of both the systems (master and slave) right below the ubuntu

system name. The IP address and its name should be tab separated.

192.168.xx.x2 master

192.168.xx.x3 slave

9. From Slave

system (2nd VM) – Repeat Step No 8 as listed above

Next step is to give access

to Master system to gain a password less shell entry to Slave System. That is,

from the Master system you should be able to access the shell of the Slave

system without the need to password. This is achieved by sharing Master system

public key with the Slave system

10. From Master system (1st VM)

– Type the following command

ssh-copy-id -i /home/hduser/.ssh/id_rsa.pub hduser@slave

You will be prompted for

confirmation. Type yes and enter. And then for password. Give password of

hduser.

Slave added to the list of know

hosts confirmation message would show on screen

Now enter below command and you

will not be prompted for any password which confirms the keys are shared

properly

ssh slave

ssh slave

Next step is to

record the Namenode systems name/ipaddress in the hadoop’s /conf/masters file. This

has to be done in both the Master (1st VM) and Slave (2nd

VM) systems

11. From

Master system (1st VM) – Type the following command to open

masters file

leafpad /home/hduser/hadoop/conf/masters

Enter following values and save

master

12. From

Slave system (2nd VM) – Repeat Step 11 as listed above

Next we have to list

the names of the salves system in the hadoop’s /conf/slaves file. This is

needed only in the Master (1st VM) system

13. In the

Master system (1st VM) – Type the following command to open

slaves file

leafpad /home/hduser/hadoop/conf/slaves

Enter following values

master

slave

Next we will have to record

the Namenode system’s name in core-site.xml file and the Job tracker system

name in mapred-site.xml file. This has to be done in both the Master (1st

VM) and Slave (2nd VM) systems

14. From

Master system (1st VM) – Type the following command to open core-site.xml

file

leafpad /home/hduser/hadoop/conf/core-site.xml

Replace the word localhost:54310 with master:54310

15 From

Master system (1st VM) – Type the following command to open

mapred-site.xml file

leafpad /home/hduser/hadoop/conf/mapred-site.xml

Replace the word localhost:54311 with master:54311

16. From Slave

system (2st VM) – Repeat Step 14 and 15 as listed above and save

the changes made to core -site.xml and mapred-site.xml files

17. In the

Master system (1st VM) – Type the following command to open

hdfs-site.xml file. Here we will change the replication factor to 2

leafpad /home/hduser/hadoop/conf/hdfs-site.xml

Since the Salve

system was initially configured to function as Pseudo Mode Cluster, its

datanode will still be referring the Namenode namespaceID of the same system

instead of using the Namenode namespaceID of the master system (new Namenode).

The namespaceID is stored in the VERSION file. This needs to be changed only in

the Slave system as the Master system still continues to work both as master

and slave

18. From Master

system (1st VM) – We need to find out the namespaceID of the

Namenode. For that type the following command to open VERSION file

sudo leafpad /home/hduser/hadoop_tmp_files/dfs/data/current/VERSION

Just Note down the namespaceid.

My Master systems namespaceID=306023161

Note: The VERSION file is located inside

hadoop_tmp_files folder whose path is specified in the

/hadoop/bin/core-site.xml file. If you have changed the storage path, pass the

same path in the above command

19. From Slave

system (2st VM) – We need to change the namespaceID which is

shared by the namenode with the datanode. For that, type the following command

to open VERSION file

sudo leafpad /home/hduser/hadoop_tmp_files/dfs/data/current/VERSION

Change the namespaceID to what

you have noted down from the previous step. In my example I will change it to

namespaceID=306023161

Note: The VERSION file is located inside

hadoop_tmp_files folder whose path is specified in the

/hadoop/bin/core-site.xml file. If you have changed the storage path, pass the

same path in the above command

20. From Master

system (1st VM) – Next step is to Start all hadoop services

/home/hduser/hadoop/bin/start-all.sh

21 From

Master system (1st VM) – Type jps command to check if the below

listed java processes are running

namenode

secondary namenode

datanode

jobtracker

tasktracker

22. From Slave

system (2nd VM) – Type jsp command to check if the below listed java

processes are running

tasktracker

datanode

23 From

system (1st VM) – Open Chrome browser in your lubuntu Master machine

and browse for Localhost:50070. Number

of Live nodes would show up as 2. This confirms that you have setup a fully

distributed Hadoop Cluster with 2 Datanodes.

Hope this helped. Do reach out to me if you have any

questions.

If you found this blog useful, please convey your thanks by

posting your comments

Nice and good article. It is very useful for me to learn and understand easily. Thanks for sharing your valuable information and time. Please keep updating Hadoop administration Online Training

ReplyDelete